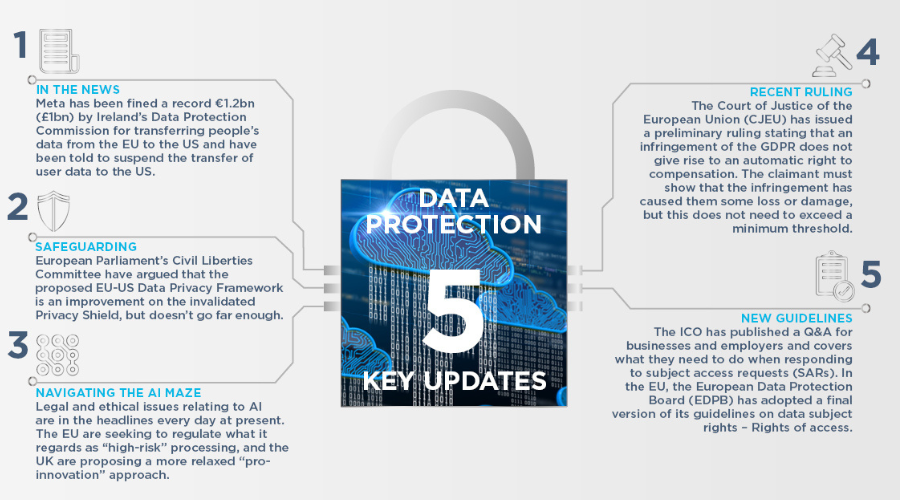

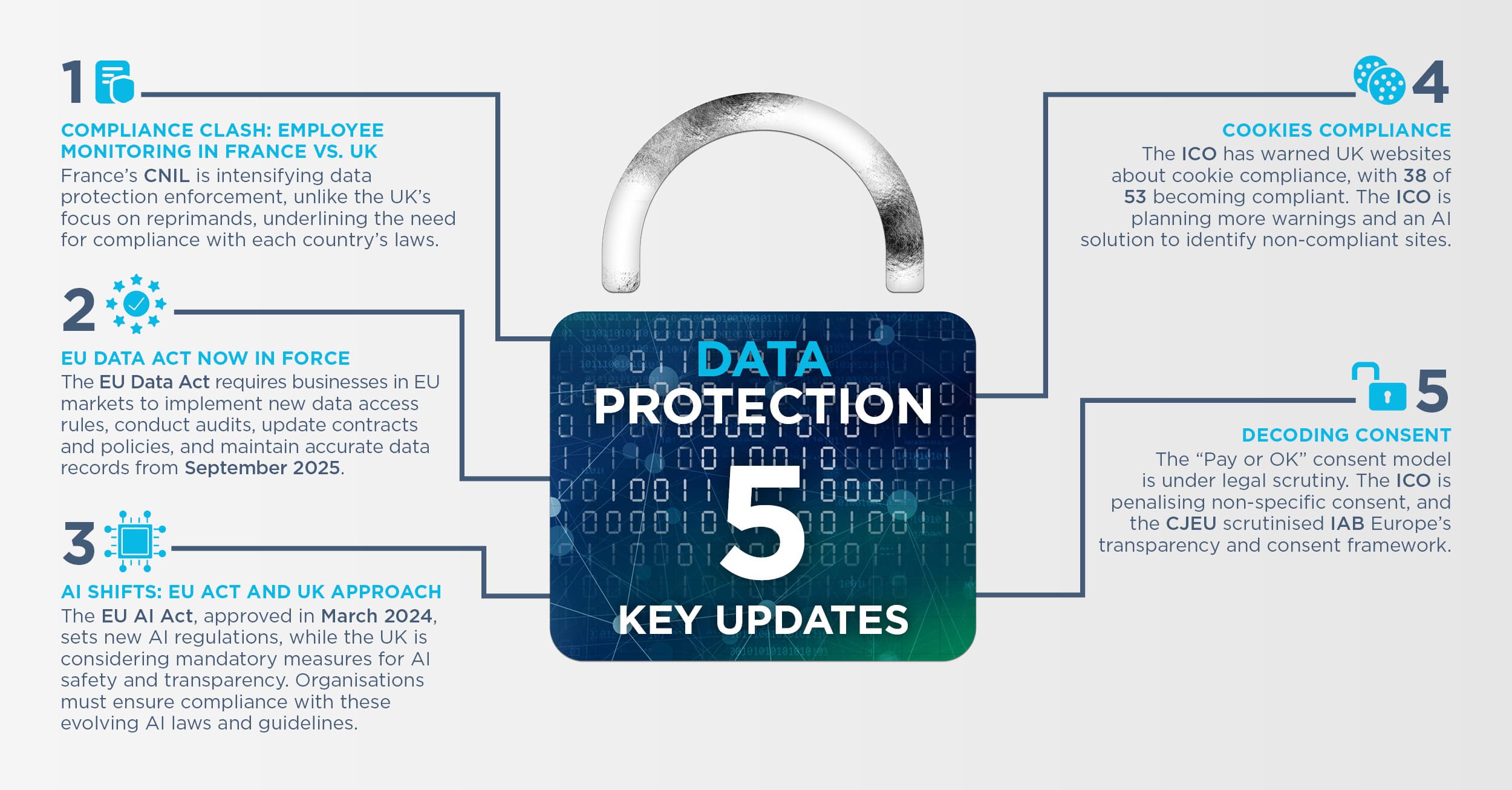

Click on the links below to read more:

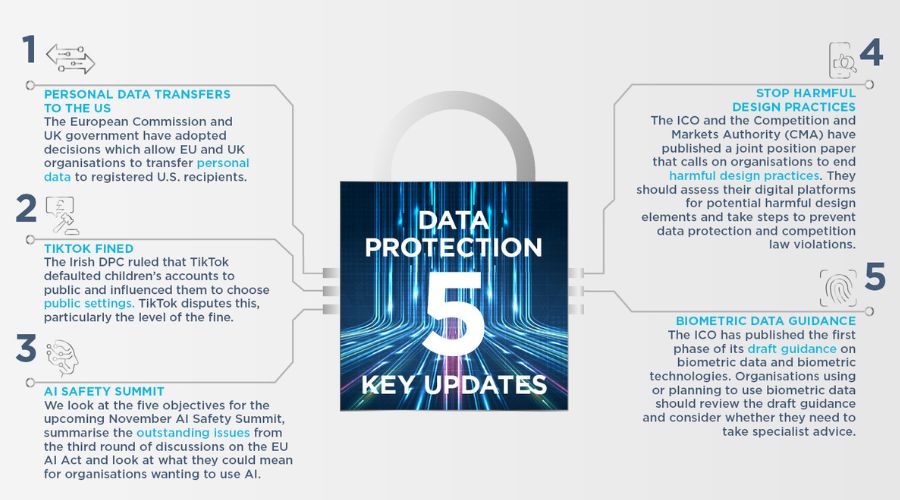

Data protection enforcement and employee monitoring in France and the UK

On 16 February the CNIL (the French data protection authority, or DPA) published its assessment of its enforcement action in 2023. That year it imposed 42 fines totalling nearly €90 million and issued 168 formal notices and 33 reminders of legal obligations. The report states that the number of sanctions is increasing due to the combined effect of the CNIL's "simplified sanctions" procedure, a rise in complaints and closer cooperation between EU DPAs. The "simplified sanctions" procedure enables the CNIL to act more quickly and easily in straightforward cases and to impose an injunction or a fine of no more than €20,000.

In contrast, the UK Information Commissioner's Office (ICO) has been criticised for focusing its enforcement on breaches of the Privacy and Electronic Communications Regulations (PECR), which govern electronic direct marketing, rather than on breaches of the UK GDPR, and for relying on reprimands rather than fines, particularly in relation to public sector bodies.

In January 2024, the CNIL fined Amazon France Logistique €32 million for excessively intrusive monitoring of employees' activity and performance, and for using video surveillance without providing sufficient information or putting in place adequate security measures. The CNIL found that these practices breached the GDPR's requirements on data minimisation, lawful basis, transparency and security. In the UK, the ICO has recently published guidance on monitoring workers and ordered Serco Leisure and associated leisure trusts to stop using facial recognition technology and fingerprint scanning to monitor workers' attendance.

So what?

- Organisations operating in France need to be aware of the CNIL's enforcement strategy, including its "simplified sanctions" procedure, which means that the risk of a fine for breaching the GDPR is higher in France than in the UK.

- Organisations operating in France and/or the UK which monitor their workers must do so in accordance with data protection law. The ICO's and the CNIL's guidance explains how to comply with the law, including its transparency requirements, and sets out additional considerations for organisations using biometric data for time and attendance control and monitoring.

EU Data Act now in force: what do you need to do?

The EU Data Act came into force on 11 January 2024, with most of its provisions applying from 12 September 2025. It applies to businesses within its scope that operate in EU markets, even if they are not based in the EU. It introduces new requirements governing access to, and reuse of, data (including non-personal data) generated by connected products and related services.

So what?

Organisations must:

- carry out a scoping exercise to assess whether they fall under the scope of the Data Act;

- if so, conduct an audit, a compliance gap analysis and a risk assessment to prepare for implementing the changes required by the Act;

- review the data that they process to identify any that will not need to be shared because it is protected by other laws, eg trade secrets or intellectual property;

- ensure that their connected products are designed and manufactured, and their related services designed and provided, so that the data is accessible to the user by default and free of charge, in an easy, secure and direct manner, to satisfy the new "data accessibility by design" obligation;

- review and update their standard contracts, terms and conditions and privacy notices to align with the new obligations and requirements imposed by the Act, including making data available under fair, reasonable and non-discriminatory terms and conditions and in a transparent manner;

- amend contracts to ensure transparency on international access and transfers and put in place appropriate safeguards to prevent unlawful third-country governmental access to and transfers of non-personal data;

- update internal policies and procedures to ensure compliance with the Act and to establish processes for handling data from connected products and related services; and

- maintain accurate records of data relating to connected products and services so that they can demonstrate compliance.

AI update: The EU AI Act and the UK government consultation response

The EU AI Act

The start of 2024 has seen two key updates in relation to AI regulation. First, following political agreement between the EU institutions on the AI Act in December 2023, the European Parliament approved the Act on 13 March 2024. The Act is expected to be finally adopted before the end of the current legislative term. It will then be published in the Official Journal of the EU and will come into force 20 days later. Most of its provisions will become applicable 24 months after the Act comes into force, with the following exceptions:

- bans on prohibited practices (six months after entry into force);

- codes of practice (nine months after entry into force);

- general-purpose AI rules, including governance (12 months after entry into force); and

- obligations for high-risk systems that are products, or safety components of products, covered by existing EU product safety legislation (36 months after entry into force).

UK government response to AI white paper consultation

On 6 February, the Department for Science, Innovation and Technology (DSIT) published the government's long-awaited response to the consultation on its AI white paper, which was published in March 2023. The government's position remains that it does not intend to introduce legislation to regulate AI at this time, although it acknowledges that some mandatory measures will ultimately be required to address potential AI-related harms and ensure public safety. The government will not rush to legislate, but will evaluate whether it would be necessary and effective to introduce a statutory duty on regulators to have due regard to the AI principles. It is also exploring whether and how to introduce targeted measures for developers of highly capable general-purpose AI systems, covering transparency (for example, on training data), risk management, and accountability and corporate governance.

Other key points include:

- The government has written to a number of regulators impacted by AI (including the ICO) to ask them to publish an outline of their strategic approach to AI by 30 April 2024.

- The government will formalise its coordination with regulators, including by establishing a steering committee, by spring 2024.

- DSIT will provide updated guidance in spring 2024 to ensure the use of AI in HR and recruitment is safe, responsible and fair.

- DSIT is working closely with the Equality and Human Rights Commission to develop new solutions to address bias and discrimination in AI systems.

- Later this year, DSIT will launch the AI Management Essentials scheme, setting a minimum good practice standard for companies selling AI products and services. It will consult on introducing this as a mandatory requirement for public sector procurement.

So what?

- Organisations putting AI tools on the market or deploying them in their business need to carry out an audit to identify whether they will be subject to the EU AI Act, in particular the prohibition on "unacceptable risk" AI systems, which is due to become applicable later this year.

- Organisations operating in the UK will not have to comply with additional legislation in the short term, but must comply with relevant laws already in force, including the UK GDPR, and should review the ICO's guidance on how to implement AI in compliance with data protection law.

- They should also monitor the progress of the UK Data Protection and Digital Information Bill, which would amend the law on automated decision-making.

Update: The ICO cookies warning

In the January 2024 issue of Data Diaries we wrote about the letters that the ICO sent in November 2023 to the operators of some of the UK's top websites, warning them to make changes to comply with data protection law. On 31 January the ICO published a short update:

- Of the 53 organisations contacted, 38 have changed their cookie banners to make them compliant and four have committed to achieving compliance within the next month.

- Several others are working to develop alternative solutions, including contextual advertising and subscription models. In the next month, the ICO will provide further clarity on how these models can be implemented in compliance with data protection law.

- The ICO is already preparing to write to the next 100 websites – and the 100 after that.

The ICO is also developing an AI solution to help identify websites using non-compliant cookie banners and will run a "hackathon" early in 2024 to explore what this solution might look like in practice.

So what?

- Cookies are currently a key enforcement area for the ICO and the EU DPAs, so website operators should audit their use of cookies and their cookie banners to ensure that they comply with data protection law.

The future of web advertising: "Pay or OK" and the CJEU judgment on IAB Europe's transparency and consent framework (TCF)

"Pay or OK" and online choice architecture

Following on from the cookies update above, the "Pay or OK" approach to consent is a current hot topic, although it has interested data protection lawyers for a few years. The expression refers to the practice of making website or app users choose between a paid-for subscription and free access in return for allowing their personal data to be processed for behavioural advertising purposes. In 2021, privacy activist Max Schrems' organisation noyb filed complaints against seven major German and Austrian news websites for using this model. In 2023, a German DPA decided that, while "Pay or OK" was permissible in principle, the news site heise.de's use of it was unlawful because it did not allow users to give specific consent to particular purposes.

More recently, in October 2023 the European Data Protection Board (EDPB) issued an urgent binding decision banning Meta from processing personal data for behavioural advertising on the legal bases of contractual necessity and legitimate interests, leaving consent as the only option. Meta has since switched to a "Pay or OK" model in the EU. As a result, a number of DPAs have written to the EDPB asking it to adopt a formal position on the model, and 28 civil rights organisations (including noyb) have asked the EDPB to issue a decision on the subject that protects privacy rights. The EDPB is expected to publish guidance in late March or early April.

On 29 February, eight organisations from the network of the European Consumer Organisation (BEUC) filed a coordinated set of complaints with their national data protection authorities in France, Slovenia, Spain, Denmark, Norway, Greece and the Czech Republic against Facebook and Instagram's "pay-or-consent" model. Finally, on 18 March, 39 Members of the European Parliament called on Meta to stop its "Pay or OK" policy.

The Heise decision also highlights another issue relating to consent to personal data processing for direct marketing: under the GDPR, consent must be "specific". In the January 2024 issue of Data Diaries we referred to the joint position paper on Online Choice Architecture published by the ICO and the Competition and Markets Authority (CMA) in August 2023, which emphasises that consent must be specific and not bundled up as a condition of service. In January 2024, the ICO fined HelloFresh, in part because its consent statement for direct marketing was bundled with consent to receive free samples.

So what?

- Organisations using or considering using a "Pay or OK" model to address difficulties with relying on contractual necessity or legitimate interests should pay attention to the Heise decision and watch out for the EDPB's anticipated "Pay or OK" opinion.

- Ensure that any consent you rely on is specific. Bundled consent is unlikely to be specific, so it is more likely to be invalid than unbundled, granular consent options, in which case the processing may be unlawful. Organisations which rely on consent to process personal data should review their processes for obtaining it, including the information provided to individuals, to ensure that the consent is valid.

CJEU judgment on IAB Europe and its transparency and consent framework (TCF)

A recent judgment of the Court of Justice of the EU (CJEU) provides another example of the legal scrutiny that all aspects of behavioural advertising and adtech are currently receiving.

On 7 March, the CJEU issued a decision relating to auctions of personal data for advertising purposes.

IAB Europe is a non-profit association established in Belgium which represents undertakings in the digital advertising and marketing sector at European level. It developed the TCF which, according to IAB Europe, is compliant with the GDPR. The TCF provides a framework for large-scale processing of personal data and facilitates the recording of users' preferences by means of a Consent Management Platform ("CMP"). Those preferences are then encoded and stored in a string of letters and characters, referred to by IAB Europe as the Transparency and Consent String ("TC String"), which is shared with personal data brokers and advertising platforms participating in the OpenRTB protocol so that they know what the user has consented or objected to. The CMP also places a cookie on the user's device. When combined, the TC String and the cookie can be linked to that user's IP address.

In 2022, the Belgian DPA challenged IAB Europe’s solution and ruled in particular that:

- the TC String, which stores users' preferences and is shared with data brokers and advertising platforms, constitutes personal data; and

- IAB Europe was a data controller and did not fully comply with the GDPR.

It also imposed an administrative fine of €250,000 on IAB Europe.

IAB Europe appealed the decision to the Market Court (a section of the Brussels Court of Appeal), which referred questions to the CJEU for a preliminary ruling on whether a TC String (a digital signal containing user preferences) can be considered "personal data" under the GDPR, and on how the concept of controllership under the GDPR should be interpreted in this context.

The CJEU decided:

- Where the information contained in a TC String can be associated with an identifier, such as the IP address of the user's device, it may make it possible to identify the user. The TC String therefore contains information concerning an identifiable user and is personal data.

- It does not matter that IAB Europe does not have direct access to the data processed by its members.

- IAB Europe must be regarded as a joint controller, as it appears to exert influence over data processing operations when users' consent preferences are recorded in a TC String and to determine, jointly with its members, the purposes and means of those operations.

- IAB Europe cannot be regarded as a controller in respect of data processing operations occurring after users' consent preferences are recorded, unless it has exerted an influence over the determination of the purposes and means of those subsequent operations.

As this is a preliminary ruling, the Brussels Court of Appeal must now decide IAB Europe's appeal based on the CJEU's judgment.

So what?

As well as providing guidance on the meaning of personal data and joint controllership, this decision demonstrates that all aspects of personalised advertising are currently a top priority for data protection authorities. Organisations using any form of adtech should audit their use of it and consider the relevant law and guidance to ensure that such use is lawful.