The EU-U.S. Data Privacy Framework, the UK-U.S. Data Bridge and the Legal Challenge to the DPF

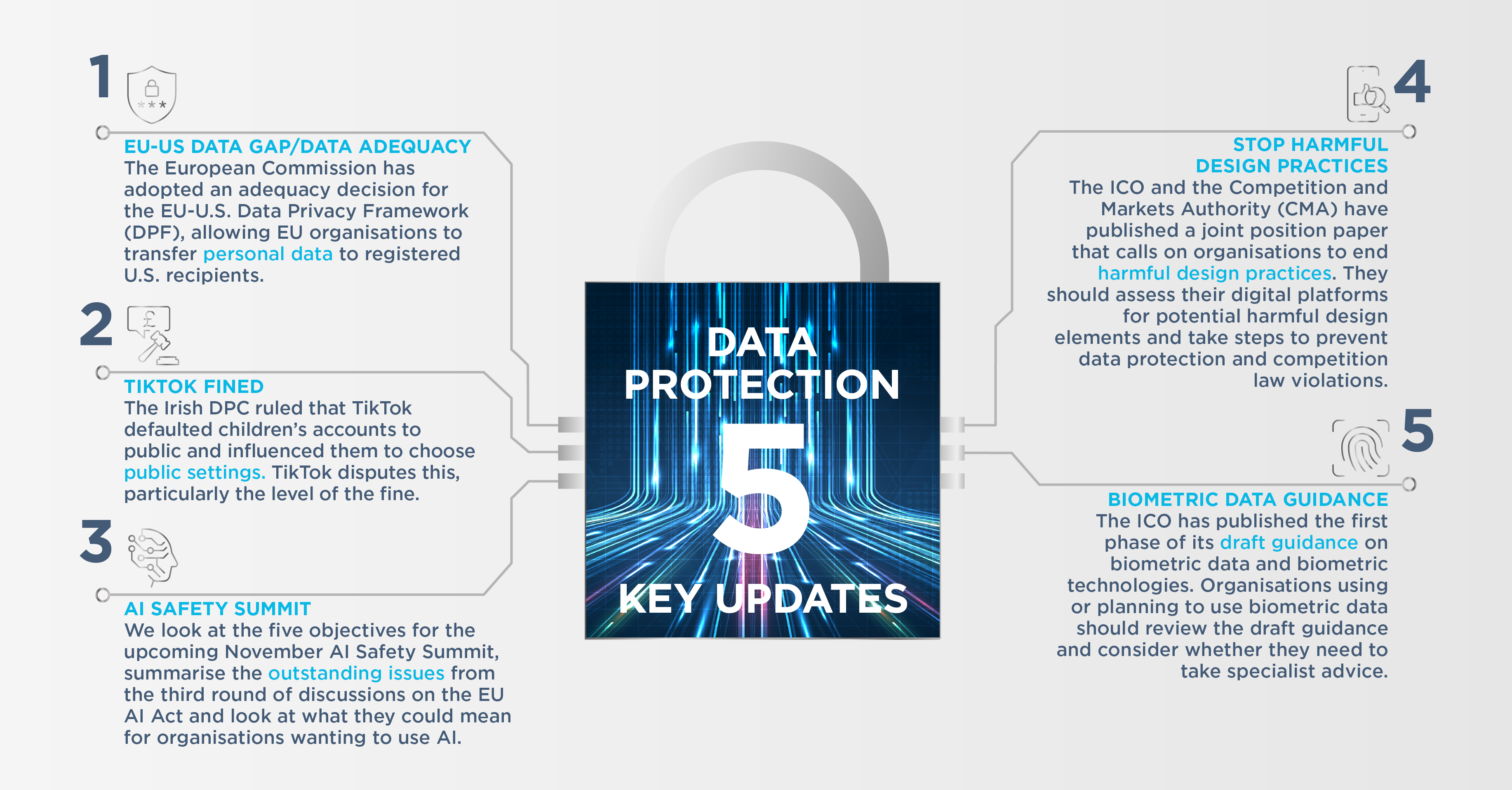

On 10 July 2023, the European Commission announced that it had adopted its adequacy decision for the EU-U.S. Data Privacy Framework (DPF), meaning that EU organisations can rely on it to transfer personal data to recipients in the United States that are registered on the Data Privacy Framework List. In the UK, on 21 September 2023 the Department for Science, Innovation and Technology (DSIT) announced that the UK-US data bridge, which forms an extension to the DPF, would come into force on 12 October 2023. This means that organisations in the UK can rely on the DPF to transfer personal data to recipients in the USA that are registered under both the DPF and the UK Extension.

DSIT factsheet

DSIT published a factsheet setting out some key points about the data bridge:

- UK special category data which is not covered by the DPF must be identified to the US recipient as sensitive when it is transferred under the UK-US data bridge. This applies to genetic data, biometric data and data concerning sexual orientation.

- When criminal offence data is shared under the data bridge as part of a human resources (HR) data relationship, US recipient organisations are required to indicate that they are seeking to receive such data under the DPF.

- When criminal offence data is shared outside an HR relationship, the UK organisation should indicate to the US recipient that it is sensitive data requiring additional protection.

- Before sending personal data to the US, organisations must confirm that the recipient is certified under the DPF and the UK Extension and check the privacy policy linked to its DPF record. For transfers of HR data, the US recipient's certification must indicate that it covers HR data.

ICO Opinion

On 21 September, the ICO published an Opinion on the UK-US data bridge, flagging four risk areas. The first is the treatment of sensitive data, covered above. The other three are the absence from the UK Extension of:

- protection for "spent" convictions under the UK Rehabilitation of Offenders Act 1974;

- rights protecting individuals from being subject to decisions based solely on automated processing; and

- the UK GDPR rights to be forgotten and to withdraw consent.

First challenge to the DPF

On 7 September, French MP Philippe Latombe announced that he was challenging the validity of the EU-U.S. DPF. He has brought this challenge as a direct action for annulment under Article 263 TFEU, which could be quicker than proceeding via a referral from a member state court under Article 267 TFEU (as in Schrems I and II). In addition, Max Schrems' privacy organisation noyb had already announced that it would challenge the DPF.

Watch our webinar

Watch AG's webinar in which some of our data protection experts provide their insights into the DPF and its impact.

So what?

Organisations in the EU can now rely on the DPF, and organisations in the UK on the DPF plus the data bridge, to transfer personal data to recipients in the USA that are appropriately registered. However, they need to ensure that they take the necessary additional steps if they are transferring HR data, genetic data, biometric data, data concerning sexual orientation or criminal offence data to the US. In addition, due to the ongoing and threatened legal challenges to the DPF, they may wish to proceed with caution, for example by putting in place layered clauses providing that standard contractual clauses (SCCs) will come into effect if the DPF is invalidated.

Irish DPC fines TikTok €345 million for GDPR breaches in relation to children's data

The Irish Data Protection Commission (DPC) has published its decision in the TikTok case, which it adopted following the European Data Protection Board's (EDPB) binding dispute resolution decision. The decision found that TikTok made children's accounts public by default and "nudged" children to select public settings rather than private ones, in breach of the principles of fairness, data protection by design and default, data minimisation and transparency. In addition to the €345 million fine, the decision includes a reprimand and an order requiring TikTok to bring its processing into compliance within three months by taking the specified action.

A TikTok spokesperson has stated that TikTok disagrees with the decision, particularly the level of the fine, and that it made changes before the investigation began. Accordingly, it appears likely that TikTok will appeal.

So what?

While this case relates in particular to the protection of children's personal data, the principles of fairness, data protection by design and default, data minimisation and transparency apply to all data processing. The ICO and CMA have recently published a joint position paper on harmful design in digital markets (see the separate item below), which addresses the practice of nudging individuals to select settings that do not protect their privacy.

AI Update: News about the AI Safety Summit plus an update on the draft EU AI Act

The AI Safety Summit

On 25 September 2023, DSIT published the objectives of the AI Safety Summit to be held at Bletchley Park on 1 and 2 November. The summit will focus on two categories of risk: misuse risks and loss-of-control risks. It will aim to achieve five objectives, with a focus on "frontier AI", meaning highly capable general-purpose AI models that can perform a wide variety of tasks and match or exceed the capabilities present in today's most advanced models. These objectives are:

- a shared understanding of the risks posed by frontier AI and the need for action;

- a forward process for international collaboration on frontier AI safety, including how best to support national and international frameworks;

- appropriate measures which individual organisations should take to increase frontier AI safety;

- areas for potential collaboration on AI safety research, including evaluating model capabilities and developing new standards to support governance; and

- a showcase of how ensuring the safe development of AI will enable AI to be used for good globally.

The draft EU AI Act

Turning to the draft EU AI Act, the third round of trilogue discussions took place on 2 and 3 October. It has been reported that the meeting made enough progress to raise hopes of finalising the Act at the next round on 25 October. The outstanding issues are:

- the definitions of "unacceptable risk" systems, which will be banned, and of "high risk" systems, which will be strictly regulated;

- the European Parliament's proposal to ban police use of real-time facial recognition systems, which is opposed by the Council, as the EU Member States are adamant that law enforcement and national security must remain under national control; and

- how to regulate foundation models that form the basis of multi-purpose systems such as ChatGPT.

So what?

With the rapid rise in the use of AI systems, organisations are keen to understand how this use will be regulated, and how regulation will differ between the UK, the EU and the rest of the world. At present, there is wide divergence between the more heavily regulated approach set out in the draft EU AI Act and the lighter-touch "pro-innovation" approach set out in the UK government's white paper published in March. The government has not yet published its response to the consultation on the white paper, although statements made by ministers indicate that its position may have changed. On 31 August the House of Commons Science, Innovation and Technology Committee published a report on the governance of AI, encouraging the government to include a tightly focused AI Bill in the next King's Speech.

While we wait for the EU AI Act to be finalised and the UK government to make its position clear, the ICO has published guidance on how to comply with data protection law when using AI. This does not create any new rules, but explains how to apply existing data protection requirements, including the need for a lawful basis, the principles of fairness and transparency, and the rules on automated decision-making. Organisations considering using AI systems to process personal data will usually need to conduct a DPIA (data protection impact assessment) to identify the risks and how to mitigate them.

The ICO/CMA position paper on harmful design

The ICO and the Competition and Markets Authority (CMA) have published a joint position paper called "Harmful design in digital markets: How Online Choice Architecture practices can undermine consumer choice and control over personal information". The paper calls on organisations to end harmful design practices and states that they should offer consumers a fair and informed choice when disclosing their personal information.

The main design practices which could breach data protection laws include:

- making it difficult for consumers to refuse personalised advertising by not presenting "accept all" and "reject all" cookie options on an equal footing;

- overly complicated privacy controls which confuse consumers or cause them to disengage;

- the use of leading language to influence consumers to share personal information;

- pressuring consumers into signing up for discounts in exchange for personal information; and

- bundling choices together in a way which encourages consumers to share more data than they would otherwise wish to.

So what?

Organisations should consider whether their websites and apps may contain elements of harmful design and how to address any potential breaches of data protection and/or competition law. The ICO has stated that it will take formal enforcement action where necessary.

The ICO draft guidance on biometric data

The ICO has published the first phase of its draft guidance on biometric data and biometric technologies, which covers biometric recognition. The second phase, addressing biometric classification and data protection, will include a call for evidence early next year. The consultation on the draft guidance closed on 20 October.

Key definitions

The key aspects addressed by this guidance include definitions of biometric data and "special category biometric data". The ICO clarifies that "special category biometric data" refers to biometric data used for the purpose of uniquely identifying (recognising) someone. However, even where an organisation does not use biometric data for that purpose, the biometric data it processes may include other types of special category data. In addition, the guidance explains the term "biometric recognition", which refers to the use of biometric data for identification and verification purposes.

Data protection requirements

Regarding data protection requirements, biometric recognition systems are likely to pose a high risk to individuals' rights and freedoms, triggering the requirement for a Data Protection Impact Assessment (DPIA). Where special category biometric data is involved, explicit consent for processing is likely to be required. Organisations must offer a suitable alternative to people who choose not to consent. They must adopt a privacy by design approach and consider whether they can deploy privacy-enhancing technologies. Lastly, organisations should consider the risks associated with accuracy, discrimination and security when dealing with biometric data, and consider using encryption to mitigate the security risk.

So what?

Collecting and using biometric data is becoming increasingly common, for example using face or voice recognition or fingerprint or iris scanning for identity checks. This is likely to involve processing special category data using innovative technology, triggering the requirement to conduct a DPIA to identify any risks and how to mitigate them. Note that the ICO draft guidance does not create any new rules, but explains how the UK GDPR applies to the use of biometric data. Organisations using or planning to use biometric data should review the draft guidance and consider whether they need to take specialist advice. The ICO intends to publish the second phase of this guidance, covering biometric classification and data protection, in early 2024.