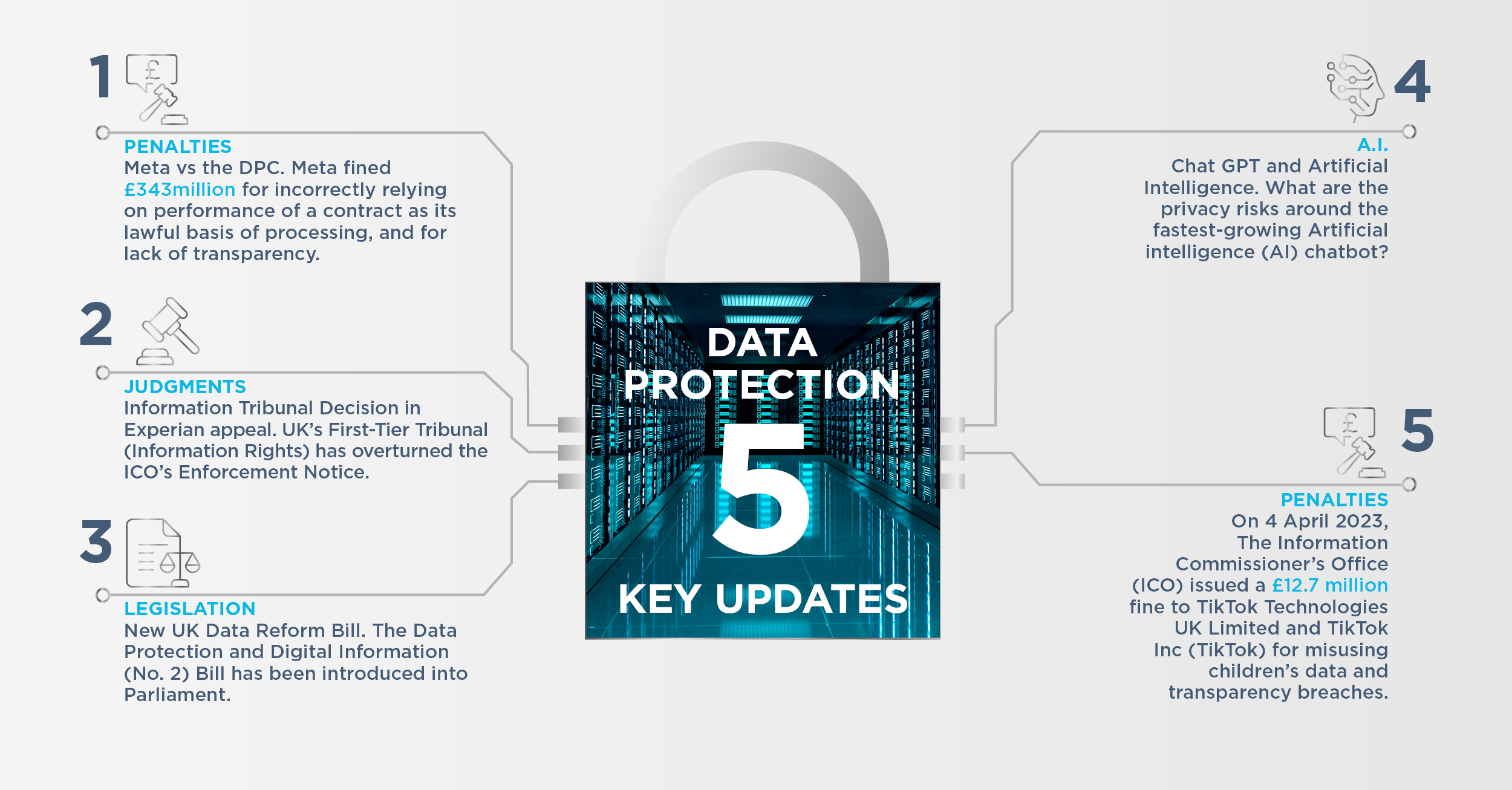

We've handpicked the top five stories and updates from the first quarter of 2023 that you need to know about.

DPC Meta Fine

The Irish Data Protection Commission (DPC) has fined Meta, the owner of Facebook, Instagram and WhatsApp, £343 million for incorrectly relying on performance of a contract instead of consent as its lawful basis for processing, and for a lack of transparency regarding some of its activities relating to behavioural advertising.

The DPC had originally accepted Meta's legal argument that relying on contract did not breach the GDPR, but was required to follow the binding determinations of the European Data Protection Board (EDPB), which concluded that Meta should have obtained consent for processing for behavioural advertising.

The decisions derive from two complaints made on 25 May 2018, the day on which the EU GDPR came into operation.

The DPC, which acts as Meta's lead supervisory authority because Meta's EU base is in Dublin, is now seeking a court ruling against a further EDPB request to investigate all of Facebook's and Instagram's data processing operations, arguing that the EDPB has no power to order this.

So what?

This decision could significantly affect Meta's operations in Europe, although Meta plans to appeal, so it may not implement the decision operationally until the appeal has been decided. It will also have implications beyond Meta: for other social media platforms, for businesses using those platforms and for online advertising generally.

The decision reveals a split among the supervisory authorities on this issue: only a relatively small number agreed that consent should have been obtained, while others (including the DPC) considered that Meta could potentially rely on performance of a contract. Ultimately, however, it was the minority view that was upheld in the decision.

The DPC also raised transparency concerns about the lack of information on behavioural advertising in Meta's privacy notice. Similar transparency complaints have been made against Meta in the context of other decisions, so it remains to be seen whether Meta will change its approach.

Please get in touch with one of our team to discuss what these updates mean for your business.

Information Tribunal Decision in Experian appeal

The UK's First-Tier Tribunal (Information Rights) has substantially overturned the ICO's Enforcement Notice issued against Experian Limited over how it handles people's personal data.

The ICO issued Experian with an Enforcement Notice in October 2020 following a two-year investigation, ordering it to make "fundamental changes" to how it handled personal data in connection with its postal marketing services, or to stop this processing altogether. Experian appealed the Enforcement Notice to the Information Tribunal, which has now issued its decision.

While the Tribunal agreed with the ICO that Experian's privacy notices could have been improved, and that Experian could not rely on "disproportionate effort" to avoid communicating its notices to certain individuals affected by the processing, it largely found in favour of Experian. In particular, it agreed that Experian could rely on legitimate interests as its lawful basis for many of the processing activities considered.

The ICO is set to appeal the decision.

So what?

The decision is the culmination of an investigation that started in 2018. It will come as a relief to those who use Experian's postal direct marketing database products and to those who currently conduct postal marketing on the basis of legitimate interests, as it gives a positive indication that this approach is compliant. Although the decision is now under appeal, it is an important indicator of the boundaries of transparency, consent and legitimate interests in the context of marketing.

The decision also provides some useful commentary on the application of the "disproportionate effort" exemption from the obligation to make privacy notices available to data subjects: Experian has been ordered to provide a notice to data subjects whose data is collected from certain public sources, including the electoral register, but is not required to do so in other cases, for example where data is obtained from certain third parties.

It is, however, another blow to the ICO, which has seen a number of its penalty notices appealed and then either settled or significantly reduced. The outcome of any appeal will have significant consequences for how the data protection principles apply to customer and consumer engagement.

UK Data Reform Bill - No.2

The Data Protection and Digital Information (No. 2) Bill was introduced into Parliament by Technology Secretary Michelle Donelan in March 2023. This comes after the previous Data Reform Bill was put on pause in September 2022.

The data protection reforms in the new Bill aim to reduce the amount of paperwork British organisations need to complete and maintain to comply with the accountability principle under the UK GDPR. In particular, the Bill proposes several recognised legitimate interests which organisations can rely on to justify processing personal data without having to carry out a separate legitimate interests assessment. A key change from the previous Bill is the inclusion of "direct marketing" as a recognised legitimate interest.

As with the previous version of the Bill, the new Bill also seeks to simplify some requirements regarding data protection impact assessments, the appointment of Data Protection Officers and records of processing activities.

The new Bill also introduces some reforms to the Privacy and Electronic Communications (EC Directive) Regulations 2003 (PECR), in particular to reduce the number of repetitive cookie pop-ups people see online. In line with the first version of the Bill, it also proposes to increase the maximum fine for breaches of these rules to the current level for breaches of the UK GDPR, namely up to 4% of global annual turnover or £17.5 million, whichever is greater.

So what?

The reforms to the UK GDPR will predominantly be relevant to UK-based organisations which are not caught by the geographical scope of the EU GDPR. The Bill is heralded as a tech-friendly and business-friendly approach to privacy, in line with the UK Government's post-Brexit agenda. However, there are concerns that the new Bill will threaten the UK's adequacy status as the UK moves further away from the EU GDPR, and that in practice the Bill, whilst making privacy compliance more workable for SMEs, will create more complexity and resource challenges for larger international businesses.

The UK Government has confirmed that organisations which are already compliant with the current UK GDPR and the EU GDPR will not need to make any changes in order to comply with the reforms introduced in the Bill – so there is no requirement to run remediation exercises or change existing practices which are designed to comply with the current rules.

The proposed increase in fines for breaches of the electronic direct marketing rules under PECR is likely to have a significant impact, as this is an area in which the ICO is particularly active. It will considerably increase the risk of running direct marketing campaigns that may contravene PECR.

ChatGPT and Artificial Intelligence

ChatGPT, an artificial intelligence (AI) chatbot launched by OpenAI in November 2022, reached 100 million active users in January 2023, making it the fastest-growing consumer application ever launched. It can answer questions and assist with tasks such as writing emails, essays and code. While its privacy risks are still being discussed by policymakers and stakeholders, some privacy regulators have already started investigating OpenAI and how it has handled personal data. Meanwhile, the UK government has published a white paper setting out its strategy for regulating AI.

On 14 March 2023, OpenAI launched GPT-4, the latest version of the language model behind ChatGPT, which can process up to 25,000 words, almost eight times as many as the original ChatGPT. The software's rapid growth and recent developments led numerous AI experts and technology figures (including Elon Musk and Apple co-founder Steve Wozniak) to issue an open letter warning of the potential risks of AI and of ChatGPT's fast growth, and calling on AI developers to work with policymakers to develop robust AI governance systems.

Their concerns may not be unfounded, as the language model supporting ChatGPT requires large amounts of data to function and improve. OpenAI is said to have fed 300 billion words from internet-based sources such as books, articles, blogs and websites into the tool, but in doing so it did not ask individuals for consent to their personal data being taken or used in this way. There are currently no procedures in place for individuals to exercise their data subject rights under the UK and EU GDPR, for example to check what personal data OpenAI holds about them or to request its deletion.

These circumstances have now set alarm bells ringing for numerous data protection regulators. On 31 March 2023, the Italian data protection regulator announced that it would ban ChatGPT and start an official investigation into whether OpenAI had complied with the GDPR. On 4 April 2023, the Canadian federal privacy commissioner similarly launched an investigation into OpenAI's collection and use of personal data, while the German commissioner for data protection announced that Germany may follow Italy's ban and investigation. In the UK, the ICO has so far taken a softer approach, with its Executive Director of Risk publishing data protection guidance on 3 April 2023. According to this guidance, developers of generative AI should, amongst other things, ensure that they have a lawful basis for processing personal data, carry out DPIAs and comply with their obligations in relation to transparency, security and purpose limitation.

Separately, on 29 March 2023 the UK government published its white paper on a "pro-innovation approach to AI regulation", launching a public consultation with responses due by 21 June 2023.

So what?

Many businesses are keen to adopt AI-based technologies to increase efficiency, but recent measures taken by data protection regulators underline that, where such technologies involve the processing of personal data, data protection laws must be considered and complied with. Lack of transparency is a particular concern.

There are also ethical considerations to contend with: such technologies may inherit biases present in their underlying datasets, and decisions made using AI tools may result in discrimination against certain groups. The use of AI must also comply with the Equality Act 2010, and this should be considered as part of any broader risk assessment before such tools are adopted.

Following the publication of the white paper, businesses should consider how the UK's approach to AI regulation may affect them and decide whether they want to respond to the consultation. As countries and supranational organisations such as the EU begin drafting policies on how to handle AI, businesses keen to adopt AI-based technologies will need to ensure that they comply with these emerging rules.

TikTok fined £12.7 million by ICO

On 4 April 2023, the Information Commissioner's Office (ICO) fined TikTok Technologies UK Limited and TikTok Inc (TikTok) £12.7 million for using children's data without parental consent and for not being clear with people (including children) about how their data is used.

While this is one of the highest fines the ICO has issued, its notice of intent in September 2022 had originally envisaged a possible fine of £27 million. At that stage, the investigation provisionally found that TikTok had: (a) processed the data of children under the age of 13 without appropriate parental consent; (b) failed to provide proper information to its users in a transparent way; and (c) processed special category data without legal grounds to do so.

In its decision on 4 April 2023, the ICO decided not to pursue its provisional finding regarding the unlawful use of special category data. However, the regulator did conclude that, between May 2018 and July 2020, TikTok had provided its services to children under the age of 13 contrary to its terms, processed their personal data without parental consent, and failed to provide proper information, in a transparent way, about how users' data was collected, used and shared. As a result, more than one million children under the age of 13 were on TikTok, with the company collecting and using their personal data. John Edwards, the UK's Information Commissioner, stated that "TikTok should have done better. Our £12.7 million fine reflects the serious impact their failures may have had. They did not do enough to check who was using their platform or take sufficient action to remove the underage children that were using their platform."

So what?

After the £20 million fine imposed on British Airways and the £18.4 million fine imposed on Marriott in 2020, this £12.7 million fine is the ICO's third-highest fine. Crucially, whilst regulators across Europe have fined social media giants before (TikTok itself has already been fined by the Dutch DPA for violating children's privacy rights), this is the first major ICO fine focusing on transparency and the misuse of children's data. An aggravating factor in the decision appears to be that TikTok ought to have known about the level of use by under-13s yet took no proactive steps to remove underage users or prevent their access.

This decision is a warning shot to all social media platforms in terms of both transparency and children's data. It is perhaps not surprising given the ICO's clearly stated objective of prioritising children's safety in the digital world. Businesses that provide online services likely to be accessed by children, in particular, need to be aware of the more stringent regulatory standards introduced by the Age Appropriate Design Code (commonly known as the Children's Code) in September 2020, which this decision also underlines.

The ICO's decision may also offer some insight into the regulator's view of the gravity of non-compliance when processing special category data under the UK GDPR. Once the ICO dropped its provisional finding that TikTok had processed special category data without legal grounds, the fine fell by more than half, from a possible £27 million to £12.7 million. Although each case will turn on its own facts, the reduction should serve as a reminder for businesses to assess whether they process special category data and, if so, to ensure that such processing is compliant.


Manuela Finger

Partner, Intellectual Property, Data Protection & IT, Commercial

Germany

Elisabeth Marrache

Partner, IS and Technology, Data Protection & Intellectual Property

France